Timeline

Oct. 25, 2021, 9 a.m. UTC

March 31, 2022, midnight UTC

Challenge

Background

The autonomous estimation of the pose (i.e., position and orientation) of a noncooperative spacecraft given camera images is a vital tool for various satellite servicing missions of scientific, economic, and societal benefits, such as RemoveDEBRIS by Surrey Space Centre, Restore-L by NASA, Phoenix program by DARPA, and several missions planned by new space start-ups such as Astroscale and Infinite Orbits. Pose estimation also enables debris removal technologies required for ensuring mankind’s continued access to space, refurbishment of expensive space assets, and the development of space depots to facilitate travel towards distant destinations.

The Next challenge in pose estimation

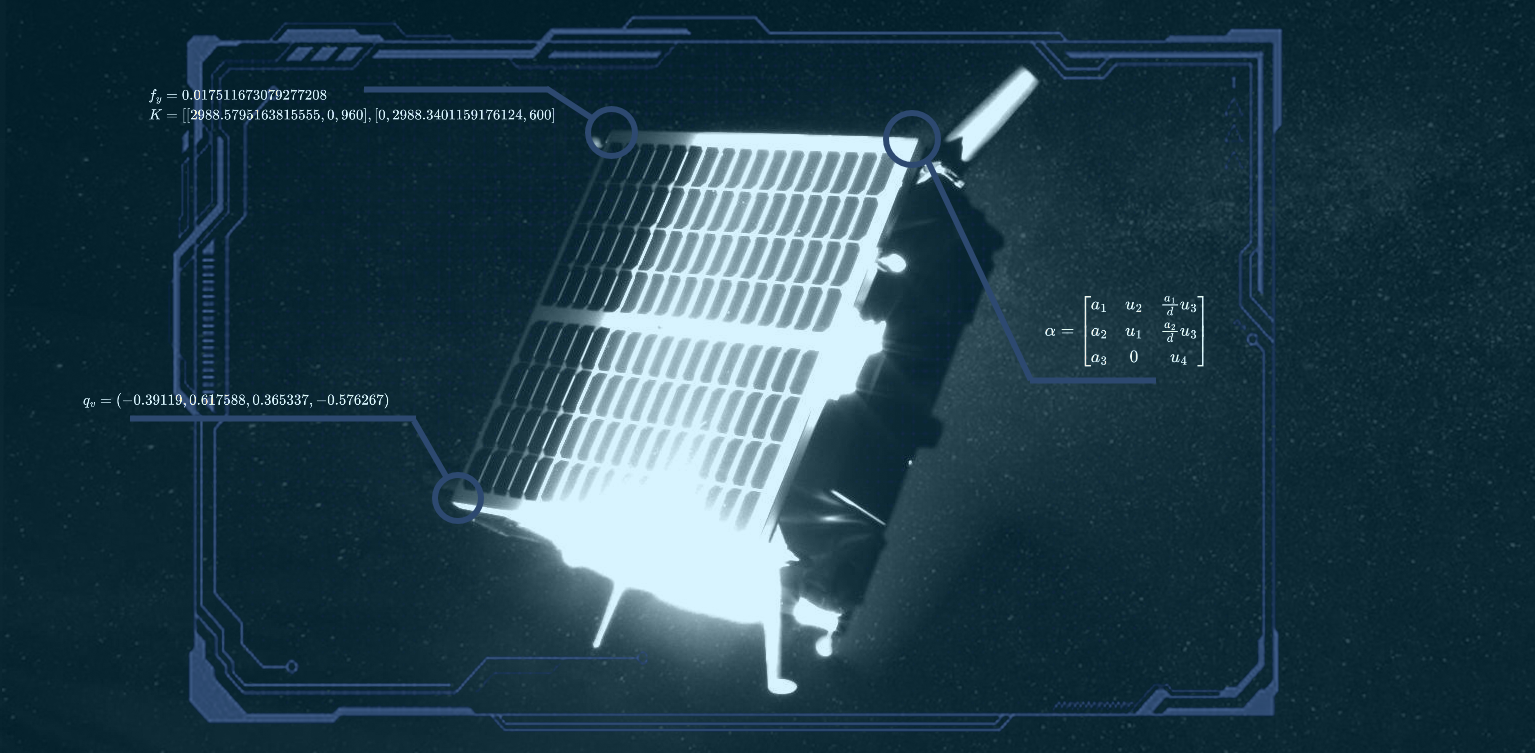

spacecraft in space. Image credit: OHB Sweden

In the previous Satellite Pose Estimation Competition (SPEC2019), the participants were tasked to estimate the pose of the Tango spacecraft from two types of imagery: synthetic images from computer graphics and real images from a robotic testbed. These two imageries constitute the first benchmark Spacecraft PosE Estimation Dataset (SPEED). While the winning team’s submission demonstrated superb performance on synthetic test images, there was also a general trend of performance gap when the submitted algorithms, trained exclusively on synthetic images, were tested on synthetic and real images. This observation triggers the next big question in developing a spaceborne computer vision algorithm: how can one validate on-ground the pose estimation algorithm on spaceborne images of the target that are unavailable prior to the mission? After all, unlike on Earth, autonomous driving in space prohibits habitual road tests and on-site debugging.

SPEED+

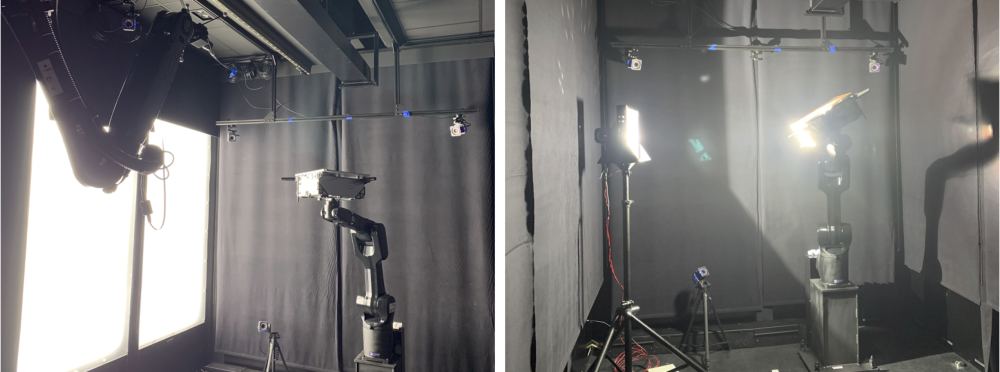

Unfortunately, the real test images of SPEED are limited in quantity and variation in environmental factors to enable comprehensive performance analysis. In response, we developed SPEED+, the next-generation dataset for spacecraft pose estimation with specific emphasis on domain gap. In addition to a new set of nearly 60,000 synthetic images, the dataset contains 9,531 Hardware-In-the-Loop (HIL) test images of the half-scale mockup model of the Tango spacecraft captured from the Testbed for Rendezvous and Optical Navigation (TRON) facility at Stanford’s Space Rendezvous Laboratory (SLAB).

The HIL images can be from either lightbox or sunlamp domains created with different sources of physical illumination (see image below). They have vastly different visual characteristics compared to the synthetic training images; therefore, they are considered as a weaker surrogate of the target spaceborne images.

Goal

The goal of this challenge (SPEC2021) is to estimate the pose of the Tango spacecraft in lightbox and sunlamp test images using the provided synthetic images and associated pose labels. While these test images would be normally unavailable to the mission developers prior to deployment, they are made available to the public in this challenge to promote community engagement and open up the possibility of applying a more variety of techniques.

References

[1] Park, T. H., Märtens, M., Lecuyer, G., Izzo, D., D’Amico, S. SPEED+: Next-Generation Dataset for Spacecraft Pose Estimation across Domain Gap. 2022 IEEE Aerospace Conference (AERO), 2022, pp. 1-15, doi:10.1109/AERO53065.2022.9843439.

[2] Park, T. H., Bosse, J., D’Amico, S. Robotic Testbed for Rendezvous and Optical Navigation: Multi-Source Calibration and Machine Learning Use Cases, 2021 AAS/AIAA Astrodynamics Specialist Conference, Big Sky, Virtual, August 9-11 (2021). [pdf]